How to Know If a Company Uses Your Art to Train Generative AI Models

Summary

This article discusses the ethical and legal concerns surrounding the use of artists’ works by companies to train generative AI models. It explores copyright law, the debate over fair use, and the implications of AI-generated derivative works. Artists face challenges in determining if their work is used without permission, with potential impacts on their rights and livelihoods. The piece highlights ongoing legal battles and the complex interplay between innovation and intellectual property rights.

Reflection Questions

- How do you feel about the use of copyrighted artistic works to train AI without the artist’s permission?

- What measures can artists take to protect their works in the age of AI?

- How do you think the legal system should balance encouraging innovation in AI with protecting artists’ copyrights?

Journal Prompt

Reflect on your stance regarding AI’s use of copyrighted works for training. Consider how this issue affects your view of AI technology and its ethical implications. Write about the steps you might take to ensure your own works are protected or how you might contribute to the broader conversation on this topic.

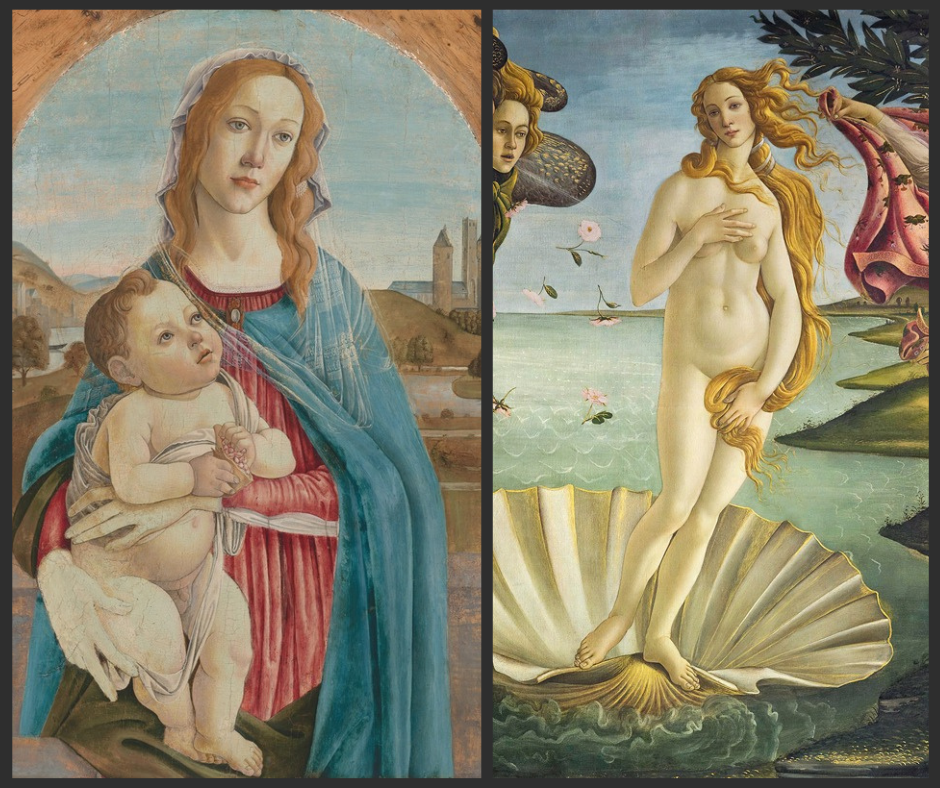

Copyright infringement concerns involving AI companies center primarily on how AI models, particularly those that generate content such as images, text, or music, are trained. Training these sophisticated models typically requires vast amounts of data. In some instances, that data includes copyrighted works, ranging from visual art and photographs to written text and musical compositions, used without the express permission of their creators. This practice has sparked legal and ethical debates and led artists, photographers, and other content creators to allege copyright infringement. Would you let a company use your work to train AI models? How would you know if an AI model used your work without your permission? Let’s discuss the current state of AI copyright law and its implications for artists.

What Does Copyright Law Currently Say About AI Tools Relying on the Creative Input of Human Artists?

Intellectual property law, as it pertains to AI models using copyrighted material for training, is currently a complex and evolving area without a clear, universally accepted stance. The rapid development of AI technologies has outpaced the existing legal frameworks, leading to a legal gray area that courts, lawmakers, and international bodies are still trying to navigate. The main issues revolve around copyright infringement, fair use (or fair dealing in some jurisdictions), and the creation of derivative AI art. Let’s take a closer look at the current situation.

Copyright Infringement

Copyright law protects original works of authorship, including literary, dramatic, musical, and artistic works, as well as certain other intellectual works. When AI models use copyrighted material for purposes such as training, without authorization or a license agreement, it raises the question of whether this constitutes copyright infringement.

Currently, there’s no consensus on whether data mining and the ingestion of copyrighted materials by AI for training purposes alone violates the Copyright Act, partly because this use is not explicitly addressed in most copyright laws. Works generated by AI from these copyrighted materials are an entirely different animal, especially if they bear substantial similarity to the original artistic work.

Fair Use and Fair Dealing

Fair use (in the U.S.) and fair dealing (in other jurisdictions like the UK and Canada) are doctrines that allow limited use of copyrighted material without permission from the copyright holder, typically for purposes such as criticism, comment, news reporting, teaching, scholarship, or research. The application of fair use to AI training involves considering factors such as the purpose of the use, the nature of the copyrighted work, the amount and substantiality of the portion used, and the effect of the use on the market for the original work. However, whether AI training falls under fair use is highly contentious and depends on the specifics of each case.


Derivative Works

AI-generated content that closely resembles copyrighted material can be considered a derivative work, which usually requires permission from the copyright holder. The legal challenge arises in determining when an AI-generated output crosses the line from being inspired by to directly deriving from copyrighted material.

Why Do AI Companies Use Copyrighted Work Produced by Artists Without Their Permission?

AI companies may hesitate to enter into licensing agreements with artists for the use of their work to train AI models due to several factors, including cost, scale, and practicality concerns. Despite these challenges, there is growing pressure on AI companies to address copyright concerns more proactively, either through licensing agreements or by finding innovative solutions that respect creators’ rights while still advancing AI technology. The evolving legal landscape and ongoing lawsuits may prompt changes in how AI companies approach the use of copyrighted materials in the future.

Cost: Licensing content from artists and creators can be expensive. AI models, especially those used for generating images, text, or other forms of media, require vast amounts of data to learn and produce high-quality results. Negotiating and paying for the rights to use a large volume of copyrighted works could be prohibitively costly for many AI developers, especially startups and open-source projects.

Scale and Volume: The scale of data needed to effectively train AI models is immense. An AI system might need to analyze millions of images, texts, or other data types to understand patterns, styles, and techniques. Reaching licensing agreements for such a massive amount of content with thousands or even millions of individual copyright holders is not only logistically challenging but also time-consuming.

Fragmentation of Rights: Rights in a single work can be fragmented, with different parties holding rights to different aspects of it. For example, a single photograph might involve the photographer’s copyright in the image itself, the publicity or privacy rights of the people pictured, and the copyrights in any artwork or designs that appear in the photo. This fragmentation makes it difficult to ensure that all necessary rights are secured through licensing agreements.

Lack of Clear Legal Framework: The legal framework around AI and its use of copyrighted materials for training purposes remains unclear and varies by jurisdiction. This uncertainty can make AI companies wary of entering into licensing agreements, especially when it’s not clear what legal protections or obligations such agreements would confer in the context of AI training.

Technical Challenges: Identifying the original creators of all the materials used in training datasets can be technically challenging, especially when dealing with data scraped from the internet, where attribution may not be clear or accurate. This uncertainty about the provenance of data complicates efforts to license it legally.

Copyright Infringement Cases Against AI Companies

The Getty Images Case Against Stability AI

Getty Images, a global provider of stock photos, video, and multimedia content, initiated legal action against Stability AI. Getty Images accuses Stability AI of copyright infringement, claiming that Stability AI used millions of Getty’s copyrighted images to train its Stable Diffusion AI model without obtaining a license or permission.

Key Points of Their Lawsuit

At the heart of the lawsuit is the allegation that Stability AI unlawfully used copyrighted materials for training its AI, which could potentially create derivative works that compete with the original copyrighted works.

The case may explore the boundaries of fair use doctrine, examining whether using copyrighted images to train AI constitutes fair use or if it infringes on the copyright holders’ rights. The outcome could have far-reaching implications for photographers, artists, and creators, influencing how AI companies access and use copyrighted materials for training purposes in the future.

Implications of the Case

The Getty Images vs. Stability AI lawsuit is being closely watched by legal experts, tech companies, and content creators for several reasons. First, it could set a precedent for how copyright law applies to AI and the use of copyrighted content in training machine learning models.

Second, the case embodies the tension between fostering innovation in AI development and protecting the rights and livelihoods of content creators. Beyond the legal arguments, the case raises questions about the ethical considerations of using copyrighted works without compensation or acknowledgment.

The Kelly McKernan, Karla Ortiz, and Sarah Andersen Case Against Stability AI, DeviantArt, and Midjourney

Kelly McKernan, Karla Ortiz, and Sarah Andersen, along with other plaintiffs, filed a class action lawsuit against Stability AI (the developer of Stable Diffusion), DeviantArt (which launched an AI-generated art feature), and Midjourney (an independent research lab that offers free and paid image generators). The lawsuit was filed in the United States District Court for the Northern District of California in January 2023.

Key Points of Their Lawsuit

The artists accuse the companies of copyright infringement, alleging that their AI models were trained on vast datasets that included copyrighted images without the artists’ permission. This, they argue, not only violates their copyright but also affects their ability to control and profit from their original works.

The lawsuit underscores concerns that AI-generated images, which can mimic the style of human artists, could undermine the market for original artworks. The plaintiffs argue this could devalue their work and infringe upon their rights, affecting their livelihoods.

Beyond seeking compensation, the lawsuit aims to address the legal gray areas surrounding the use of copyrighted material in training AI. It seeks to clarify the extent to which AI companies can use copyrighted works without explicit permission and the implications of such use for copyright law.

Why This Case Is Significant

This legal battle is particularly significant because it represents one of the first major challenges to how AI companies use copyrighted content to train their models. The outcome could set precedents affecting not only the parties involved but also the broader ecosystem of AI developers, artists, and copyright holders.

It raises crucial questions about the balance between innovation in AI and the protection of intellectual property, potentially influencing future regulations and practices in AI development and deployment.

Results of the Case

Given the evolving nature of both AI technology and copyright laws, the case of McKernan, Ortiz, and Andersen vs. Stability AI, DeviantArt, and Midjourney was closely watched by many in the tech and creative industries for its potential to shape future interactions between AI developers and content creators.

In October 2023, the judge presiding over McKernan, Ortiz, and Andersen’s case largely sided with the AI companies. As Adam Schrader writes in his coverage of the case for Artnet, the judge dismissed McKernan’s and Ortiz’s copyright infringement claims because they had failed to register “Copyrights prior to filing the lawsuit.” However, Andersen’s direct copyright infringement claim against Stability AI was allowed to proceed, and the plaintiffs were given leave to amend their other claims.

How Will I Know if Generative AI Tools Use My Content Without Permission?

Determining whether an AI model has used your work without permission can be challenging, given the opaque nature of many AI training processes and the vast amounts of data involved. Detecting unauthorized use of your work in AI training sets requires vigilance and sometimes creative use of technology and legal resources.

Given the complexities involved, there’s a growing discussion in the legal, tech, and creative communities about developing more transparent and ethical frameworks for AI development and data use. Thankfully, there are several approaches and indicators that human creators can use to investigate or raise suspicions that their work is being used by generative AI systems in violation of their copyright protection.

Reverse Image Search and Similar Tools

For artists, using reverse image search engines (like Google Images, TinEye, or others specialized in art) can help identify similar images generated by AI. If AI-generated images closely resemble your unique style or specific works, it might indicate that your work was part of the training set.
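If you want to check a specific AI-generated image against one of your own, a perceptual hash offers a quick, rough comparison. The Python sketch below shows one common variant, the average hash: each image is shrunk to an 8x8 grayscale thumbnail, and each bit records whether a pixel is brighter than the thumbnail’s average. The function names and the 10-bit distance cutoff are illustrative assumptions, not an established standard, and loading image files assumes the third-party Pillow library is installed. Keep in mind that this check only flags near-copies of a specific work; it cannot detect an AI model imitating your overall style.

```python
from collections.abc import Sequence


def average_hash(pixels: Sequence[int]) -> int:
    """Return a 64-bit average hash for an 8x8 grayscale thumbnail.

    `pixels` holds 64 brightness values (0-255) in row-major order.
    Each bit is 1 when that pixel is brighter than the thumbnail's mean.
    """
    if len(pixels) != 64:
        raise ValueError("expected 64 grayscale values (an 8x8 thumbnail)")
    mean = sum(pixels) / 64
    bits = 0
    for value in pixels:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def thumbnail_pixels(path: str) -> list[int]:
    """Shrink an image file to 8x8 grayscale and return its 64 pixel values."""
    # Assumes Pillow is installed (pip install pillow); imported here so the
    # pure hashing functions above work without it.
    from PIL import Image

    with Image.open(path) as img:
        small = img.convert("L").resize((8, 8))
    return list(small.tobytes())  # mode "L" stores one byte per pixel


def looks_like_near_copy(original_path: str, suspect_path: str,
                         max_distance: int = 10) -> bool:
    """Flag two images whose average hashes differ by at most `max_distance` bits."""
    # max_distance is a hypothetical cutoff; tune it on your own images.
    distance = hamming_distance(
        average_hash(thumbnail_pixels(original_path)),
        average_hash(thumbnail_pixels(suspect_path)),
    )
    return distance <= max_distance


# Hypothetical usage:
# looks_like_near_copy("my_painting.jpg", "suspect_ai_image.png")
```

A small distance means the two thumbnails share most of their light and dark regions. Treat a match as a reason to look closer, not as proof that your work was in a training set.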

Authors might find excerpts or themes reminiscent of their unique writing style in AI-generated text. Advanced plagiarism detection tools can help identify similarities, although this can be more nuanced and difficult to ascertain.

AI Model Disclosure and Analysis

Some artificial intelligence companies and research groups publish information about their training datasets, methodologies, or data sources. While this transparency isn’t universal, reviewing the available documentation or contacting the companies directly can sometimes reveal whether your work, or the websites where you publish it, may have been included in a training set.

Community and Social Media Monitoring

Artists and creators often share their experiences and discoveries through forums, social media platforms, and professional networks. Staying engaged with these communities can help in identifying potential unauthorized use of works, as peers might share similar concerns or notice patterns related to specific AI models.

Legal and Technical Analysis

Professionals in digital forensics and copyright law can offer services to analyze content in the generative AI space and assess potential infringements, although this can be costly. In some jurisdictions, copyright holders can issue formal requests or subpoenas during litigation to compel companies to disclose the contents of their training datasets, offering a legal pathway to ascertain the use of copyrighted materials.

Monitoring AI-Generated Content Platforms

Regularly checking platforms that host AI-generated works can provide clues. Some AI-generated pieces might bear striking resemblances to known works, in style, composition, or content, suggesting the use of those works in training data.

Proactive Measures

Embedding invisible watermarks or unique digital fingerprints in your work won’t stop companies from using it to train AI models, but it can make unauthorized use easier to track and prove. Registering your works can also put you in a stronger legal position if you ever bring an infringement case. In the U.S., registration with the Copyright Office is generally required before you can sue, which is why some of the claims in the McKernan, Ortiz, and Andersen case were dismissed.
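Alongside watermarks and registration, keeping a dated record of your original files can help you document what you made and when. The Python sketch below is an illustration, not a recommended tool: it walks a folder and lists a SHA-256 fingerprint for each file. The folder layout and field names are assumptions. A cryptographic hash only matches byte-for-byte identical files, so it documents your originals rather than detecting AI look-alikes, and a timestamp you generate yourself carries more weight when the resulting manifest is also stored somewhere with an independent date.

```python
import hashlib
import json
import sys
from datetime import datetime, timezone
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(folder: Path) -> list[dict]:
    """List every file under `folder` with its SHA-256 digest and one shared UTC timestamp."""
    recorded_at = datetime.now(timezone.utc).isoformat()
    return [
        {
            "file": path.relative_to(folder).as_posix(),
            "sha256": sha256_of_file(path),
            "recorded_at": recorded_at,
        }
        for path in sorted(folder.rglob("*"))
        if path.is_file()
    ]


if __name__ == "__main__":
    # Folder to fingerprint; defaults to the current directory.
    target = Path(sys.argv[1]) if len(sys.argv) > 1 else Path(".")
    print(json.dumps(build_manifest(target), indent=2))
```

For example, saving this as a hypothetical `make_manifest.py` and running `python make_manifest.py Portfolio > manifest.json` would write one entry per file in a `Portfolio` folder.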

Design Dash
